Quick Answer: Setting AI policies for legal teams and business users is a structured process that establishes clear guidelines for when, how, and by whom AI tools can be used within an organization. Effective AI policies address data security, attorney-client privilege protection, regulatory compliance, and risk management while enabling teams to leverage AI capabilities confidently. Organizations need these policies to prevent inadvertent data exposure, maintain professional ethical standards, and align with emerging regulations like the EU AI Act.

Key Takeaways

- AI policies help protect organizations from EU AI Act penalties, which can reach €35 million or 7% of global annual turnover for prohibited AI practices and €15 million or 3% for breaches of high-risk system obligations. This makes comprehensive governance frameworks essential rather than optional.

- Attorney-client privilege can be waived by inputting confidential information into public AI systems, requiring specific policies that distinguish between approved enterprise tools and prohibited public platforms for legal work.

- Organizations with formal AI governance report 60% fewer data security incidents and 45% faster AI adoption rates compared to those without structured policies, demonstrating that good governance accelerates rather than inhibits innovation.

- The EU AI Act phases in obligations between February 2025 and August 2027, requiring organizations to implement risk assessments, transparency measures, and human oversight for high-risk AI applications on specific timelines.

- Cross-functional AI policies that cover legal, procurement, HR, and sales teams prevent the creation of governance silos where different departments use incompatible tools or contradictory standards, creating organizational risk and inefficiency.

Executive Summary

The integration of AI into legal and business operations has moved from experimental to essential. As organizations adopt AI tools for contract analysis, due diligence, and compliance monitoring, the need for comprehensive AI governance has become critical. This guide provides practical steps for establishing AI policies that protect your organization while enabling innovation.

Setting AI policies is not about restricting technology use. It is about creating guardrails that enable teams to leverage AI confidently. With regulatory frameworks like the EU AI Act now active and professional bodies issuing guidance on AI use in legal practice, organizations need clear policies that address both compliance requirements and practical operational needs.

This guide covers the essential components of AI policy development, from initial risk assessment through implementation and monitoring. You will find actionable frameworks, compliance considerations, and practical examples that can be adapted to your organization’s specific needs.

Why Are AI Policies Essential for Legal and Business Users?

AI policies are essential because they establish the framework for responsible technology adoption while protecting organizations from legal, ethical, and operational risks that emerge when AI tools are used without appropriate oversight.

The regulatory landscape for AI is rapidly taking shape. The EU AI Act entered into force on August 1, 2024. It will be fully applicable on August 2, 2026, and the high-risk rules for AI embedded in regulated products will apply from August 2, 2027. The Act establishes specific obligations for high-risk AI applications. The American Bar Association Standing Committee on Ethics and Professional Responsibility released ABA Formal Opinion 512 in July 2024, emphasizing the duties of competence and confidentiality when using AI tools. Similarly, the UK’s Solicitors Regulation Authority has addressed AI-assisted legal work, including its uses and potential, in several of its Risk Outlook reports.

Beyond regulatory compliance, AI policies address critical operational risks. Without clear guidelines, legal teams risk inadvertently waiving attorney-client privilege by inputting sensitive information into public AI systems. Data leaks through unsecured AI platforms can expose confidential client information or trade secrets. Model errors and hallucinations can lead to incorrect legal advice or flawed business decisions if outputs are not properly verified.

Business risks extend beyond legal concerns. Inconsistent AI usage across departments creates operational inefficiencies and potential compliance gaps. When procurement teams use different AI tools than legal departments, or when sales teams input customer data into unvetted systems, organizations face reputational damage and potential regulatory fines. A single data breach involving AI systems can trigger notification requirements under GDPR, US state breach-notification laws, and sector-specific regulations. It can also create liability under statutes such as the CCPA.

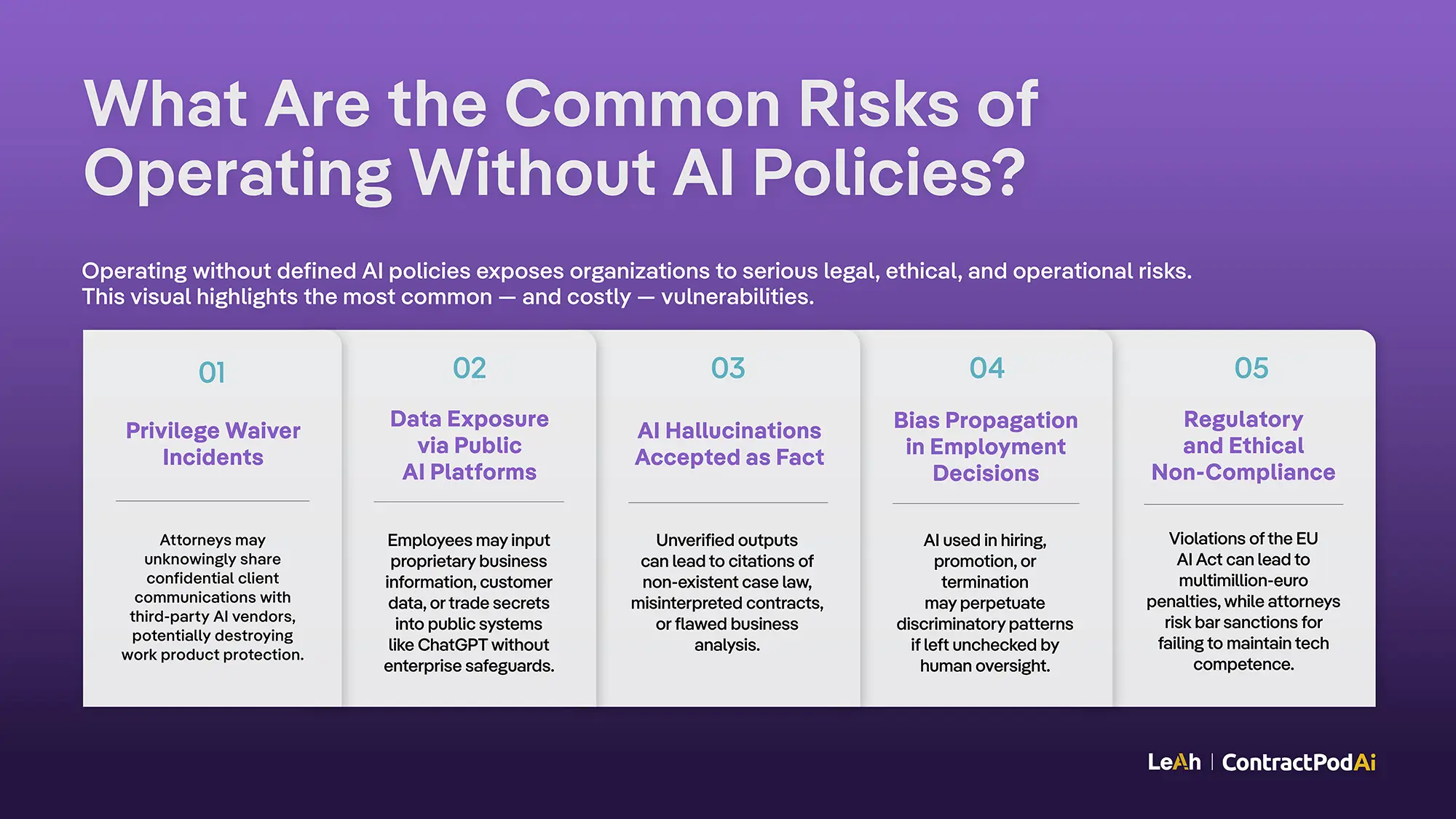

What Are the Common Risks of Operating Without AI Policies?

- Privilege waiver incidents where attorneys unknowingly share confidential client communications with third-party AI vendors, potentially waiving attorney-client privilege or work product protection

- Data exposure through public AI platforms when employees input proprietary business information, customer data, or trade secrets into systems like ChatGPT without enterprise agreements

- AI hallucinations accepted as fact leading to citations of non-existent case law, incorrect contract interpretations, or flawed business analysis when outputs are not verified

- Bias propagation in employment decisions where AI tools used in hiring, promotion, or termination processes perpetuate discriminatory patterns without human oversight

- Regulatory non-compliance penalties reaching millions of euros under the EU AI Act or professional sanctions from bar associations for failure to maintain competence with technology

What Are the Key Principles of an Effective AI Policy?

Effective AI policies rest on five foundational principles that balance innovation with risk management.

Transparency forms the cornerstone of responsible AI use. Teams need clarity on which AI tools are approved, what tasks they can perform, and how AI-generated content should be disclosed. This includes marking AI-assisted documents, noting when AI tools have been used in analysis, and maintaining clear records of AI involvement in decision-making processes. Organizations should publish an approved AI tools list accessible to all employees, updated quarterly as new tools are vetted or restrictions change.

Accountability establishes clear ownership structures. Each AI implementation needs a designated owner responsible for compliance, performance monitoring, and incident response. This extends to approval workflows that specify who can authorize new AI tools, who reviews AI outputs for critical decisions, and who manages vendor relationships for AI services. When something goes wrong with an AI system, accountability principles ensure there is no confusion about who must respond and remedy the situation.

Security protocols protect sensitive data throughout the AI lifecycle. This includes encryption requirements for data in transit and at rest, restrictions on data residency for cloud-based AI services, and access controls that limit who can use AI tools containing confidential information. Organizations must also address model security, preventing unauthorized access to proprietary AI systems or training data. Security considerations should extend to vendor assessments, ensuring AI providers maintain appropriate certifications like SOC 2 Type II or ISO 27001.

Human oversight keeps AI as a tool rather than a replacement for professional judgment. Critical decisions require human review, with clear standards for when AI recommendations can be accepted versus when additional verification is needed. This is particularly important in legal contexts where professional liability and ethical obligations require attorney involvement. Human oversight should be proportional to risk, with high-stakes decisions receiving more intensive review than routine tasks.

Auditability creates accountability through comprehensive logging. Organizations need records of what AI tools were used, what inputs were provided, what outputs were generated, and how those outputs influenced decisions. This audit trail becomes crucial for regulatory compliance, quality assurance, and potential litigation discovery. Audit logs should be immutable, retained according to records retention schedules, and accessible to compliance teams and auditors when needed.
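An audit trail is easier to trust when later edits can be detected. The minimal Python sketch below assumes an in-memory log and hypothetical record fields (`tool`, `user`, `input_summary`, `output_summary`). It chains each entry to the one before it with a SHA-256 hash, so changing or reordering an earlier record makes verification fail. This design is tamper-evident rather than truly immutable. In practice, immutability would come from the storage layer (for example, write-once storage). The latest hash would also need to be stored separately to detect entries deleted from the end of the log.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so identical content always produces the same hash.
    payload = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI tool use (illustrative sketch)."""
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"record": dict(record), "prev_hash": prev_hash, "hash": _digest(record, prev_hash)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute each hash in order; an edited, reordered, or removed
        # (non-final) entry breaks the chain.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"tool": "contract-review-ai", "user": "jdoe",
            "input_summary": "NDA draft v3", "output_summary": "3 clauses flagged"})
log.append({"tool": "research-assistant", "user": "asmith",
            "input_summary": "limitation period query", "output_summary": "5 citations"})
print(log.verify())  # True

log.entries[0]["record"]["output_summary"] = "no issues"  # simulate an after-the-fact edit
print(log.verify())  # False
```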

How Can Organizations Implement These Principles?

Organizations should create a policy framework that explicitly addresses each principle with concrete requirements. Transparency requirements might mandate that all AI-generated contracts include a disclosure statement. Accountability might require every AI tool to have a named business owner and technical administrator. Security could mandate that only enterprise AI tools with appropriate data processing agreements can access confidential information. Human oversight might specify that AI contract analysis must be reviewed by an attorney before execution. Auditability could require monthly reports of AI tool usage by department and task type.
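To illustrate the auditability example, a monthly usage report can be as simple as counting logged events. This sketch assumes that usage events are exported as dictionaries with hypothetical `date`, `department`, `task_type`, and `tool` fields. Real AI platforms will expose their own log formats.

```python
from collections import Counter
from datetime import date


def monthly_usage_report(events, year, month):
    """Count AI tool uses per (department, task type) for one calendar month."""
    counts = Counter(
        (e["department"], e["task_type"])
        for e in events
        if e["date"].year == year and e["date"].month == month
    )
    # Sort for a stable, readable report: department first, then task type.
    return sorted(counts.items())


events = [
    {"date": date(2025, 3, 3), "department": "Legal", "task_type": "contract review", "tool": "contract-review-ai"},
    {"date": date(2025, 3, 10), "department": "Legal", "task_type": "contract review", "tool": "contract-review-ai"},
    {"date": date(2025, 3, 12), "department": "Procurement", "task_type": "supplier risk", "tool": "supplier-risk-ai"},
    {"date": date(2025, 4, 1), "department": "Legal", "task_type": "research", "tool": "research-assistant"},
]

for (dept, task), n in monthly_usage_report(events, 2025, 3):
    print(f"{dept:<12} {task:<16} {n}")
```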

How Can Legal Teams Draft an AI Policy?

Creating an AI policy for legal teams requires careful consideration of professional obligations alongside practical workflow needs. An AI policy for legal teams is a governance document that specifies which AI technologies lawyers can use, under what circumstances, and with what safeguards to maintain professional ethical standards and protect client interests.

Step 1: Define scope with precision. Identify which AI tools your legal team currently uses or plans to adopt. This might include contract analysis platforms, legal research assistants, document automation tools, and e-discovery systems. For each tool, specify approved use cases. Contract review AI might be approved for initial analysis but not final approval. Legal research tools might be acceptable for case law identification but require human verification of citations. Create a tiered classification: approved without restrictions, approved with conditions, prohibited for specific data types, and completely prohibited.
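The four-tier classification can also be kept as a machine-readable register, so that intake forms or internal tooling apply it consistently. The sketch below is hypothetical throughout: the tool names, conditions, and restricted data types are placeholders. Unlisted tools are treated as prohibited until vetted, which is one reasonable default rather than a requirement.

```python
from enum import Enum


class Tier(Enum):
    APPROVED = "approved without restrictions"
    CONDITIONAL = "approved with conditions"
    DATA_RESTRICTED = "prohibited for specific data types"
    PROHIBITED = "completely prohibited"


# Hypothetical register: tool -> (tier, details). For CONDITIONAL tools the details
# are conditions of use; for DATA_RESTRICTED tools they are the banned data types.
TOOL_REGISTER = {
    "document-automation": (Tier.APPROVED, []),
    "contract-review-ai": (Tier.CONDITIONAL, ["initial analysis only", "attorney gives final approval"]),
    "research-assistant": (Tier.CONDITIONAL, ["verify every citation"]),
    "public-chatbot": (Tier.DATA_RESTRICTED, ["client confidential", "work product"]),
    "unvetted-browser-plugin": (Tier.PROHIBITED, []),
}


def check_use(tool, data_type):
    """Return (allowed, notes) for using `tool` on data of category `data_type`."""
    if tool not in TOOL_REGISTER:
        return False, ["not on the approved list; submit for review"]
    tier, details = TOOL_REGISTER[tool]
    if tier is Tier.PROHIBITED:
        return False, ["tool is prohibited"]
    if tier is Tier.DATA_RESTRICTED and data_type in details:
        return False, [f"{tool} may not process {data_type} data"]
    if tier is Tier.CONDITIONAL:
        return True, list(details)  # allowed, but only under these conditions
    return True, []


print(check_use("contract-review-ai", "client confidential"))
print(check_use("public-chatbot", "client confidential"))
```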

Step 2: Risk classification helps prioritize oversight requirements. High-stakes tasks like litigation strategy, regulatory compliance assessments, and M&A due diligence require stricter controls than routine contract review or legal research. Create a matrix that maps AI tools to risk levels, with corresponding approval and review requirements for each category. For example, using AI to summarize a publicly filed court opinion might be low risk, while using AI to analyze confidential settlement terms would be high risk requiring additional safeguards.
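A risk matrix like this translates naturally into a lookup table. In the sketch below, the task names mirror the examples above. The risk levels, approvers, and review requirements are hypothetical placeholders. Unclassified tasks fall back to the strictest level, so nothing new slips through with light review.

```python
# Hypothetical risk matrix: task -> risk level. Task names follow the examples in the policy text.
RISK_BY_TASK = {
    "summarize public court opinion": "low",
    "legal research": "low",
    "routine contract review": "medium",
    "regulatory compliance assessment": "high",
    "m&a due diligence": "high",
    "litigation strategy": "high",
    "analyze confidential settlement terms": "high",
}

# Hypothetical controls per risk level (who approves the use, what review the output needs).
CONTROLS_BY_RISK = {
    "low": {"approver": "department head", "review": "spot-check facts and citations"},
    "medium": {"approver": "department head", "review": "attorney reviews output before use"},
    "high": {"approver": "general counsel", "review": "attorney review plus second reviewer"},
}


def required_controls(task):
    # Tasks nobody has classified yet default to the strictest level.
    level = RISK_BY_TASK.get(task.strip().lower(), "high")
    return {"risk": level, **CONTROLS_BY_RISK[level]}


print(required_controls("Legal research"))
print(required_controls("Drafting board minutes"))  # unclassified, so treated as high risk
```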

Step 3: Data protection rules must distinguish between different types of information. Publicly available information like published case law carries minimal risk. Internal corporate data requires standard security measures. Client confidential information and attorney work product demand the highest protection levels. Your policy should specify which categories of data can be processed by which AI systems, with clear prohibitions on using public AI tools for sensitive information. Include specific examples: lawyers can use public AI to understand legal concepts but cannot input actual client facts or case strategies.
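One way to encode these data protection rules is to rank data categories by sensitivity and give each AI system a ceiling. The system names and ceilings below are hypothetical. With this design, a single comparison answers whether a given category of data may go into a given system, and unknown systems receive nothing.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Data categories ordered by sensitivity (higher value = more sensitive)."""
    PUBLIC = 1        # e.g. published case law
    INTERNAL = 2      # internal corporate data
    CONFIDENTIAL = 3  # client confidential information and attorney work product


# Hypothetical ceilings: the most sensitive category each AI system may receive.
SYSTEM_CEILING = {
    "public-ai-chatbot": DataClass.PUBLIC,
    "internal-analytics-ai": DataClass.INTERNAL,
    "enterprise-legal-ai": DataClass.CONFIDENTIAL,  # assumes a confidentiality agreement is in place
}


def may_process(system, data):
    ceiling = SYSTEM_CEILING.get(system)
    # Systems missing from the list receive no data at all.
    return ceiling is not None and data <= ceiling


print(may_process("public-ai-chatbot", DataClass.PUBLIC))        # True: explaining a legal concept
print(may_process("public-ai-chatbot", DataClass.CONFIDENTIAL))  # False: actual client facts
```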

Step 4: Approval workflows create accountability while avoiding bottlenecks. For low-risk AI uses, department heads might have approval authority. High-risk applications might require legal operations committee review or general counsel sign-off. Include provisions for pilot programs that allow controlled testing of new AI tools before full deployment. Establish timeframes for approval decisions to prevent new tools from languishing in indefinite review. Consider creating an AI governance committee that meets monthly to review tool requests and policy updates.
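Approval timeframes are easier to enforce when each request carries a deadline. This sketch assumes two hypothetical routes. Department heads decide low-risk requests within 5 days, and the AI governance committee decides high-risk requests within 30 days, roughly one monthly meeting cycle. The sketch lists requests that have passed their deadline without a decision.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical routing: risk level -> (who decides, days allowed for a decision).
ROUTING = {
    "low": ("department head", 5),
    "high": ("AI governance committee", 30),
}


@dataclass
class ToolRequest:
    tool: str
    risk: str
    submitted: date
    decided: bool = False

    @property
    def approver(self):
        return ROUTING[self.risk][0]

    @property
    def due(self):
        return self.submitted + timedelta(days=ROUTING[self.risk][1])


def overdue(requests, today):
    """Undecided requests whose decision deadline has passed."""
    return [r for r in requests if not r.decided and today > r.due]


queue = [
    ToolRequest("meeting-summarizer", "low", date(2025, 3, 1)),
    ToolRequest("diligence-ai", "high", date(2025, 3, 1)),
]
for r in overdue(queue, date(2025, 3, 10)):
    print(f"{r.tool}: decision by {r.approver} was due {r.due}")
```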

Step 5: Privilege preservation requires specific guidelines. Attorneys must understand that inputting client communications into public AI systems can waive privilege. The policy should mandate use of enterprise AI tools with appropriate confidentiality agreements for any privileged material. Include requirements for privilege logs when AI tools access protected information and procedures for addressing inadvertent disclosure. Provide clear examples: using AI to draft a memo about a legal issue is different from using AI to analyze privileged client communications about that issue.

Step 6: Training and education make the policy effective. Initial rollout should include comprehensive training on policy requirements, approved tools, and risk identification. Ongoing education addresses new tools, regulatory updates, and lessons learned from incidents. Consider role-specific training that addresses the unique needs of different practice areas. Litigators need different AI guidance than transactional attorneys. Training should include practical scenarios: “You need to analyze 500 contracts quickly. What AI tools can you use and what safeguards must you implement?”

What Should a Legal Team AI Policy Include?

A comprehensive legal team AI policy should include these sections: purpose and scope, definitions of AI terms, approved tools list with use case specifications, data classification requirements, privilege protection rules, approval workflows, human review standards, incident reporting procedures, training requirements, and policy review schedule. The policy should reference relevant professional responsibility rules, such as ABA Model Rule 1.1 on competence and ABA Model Rule 1.6 on confidentiality.

How Should AI Policies Extend to Business Users?

While legal teams face specific professional obligations, business users across procurement, HR, sales, and other functions need tailored guidance for their AI use cases. AI policies for business users are operational guidelines that enable non-legal employees to use AI tools safely and effectively while maintaining data security, regulatory compliance, and alignment with organizational risk tolerance.

Procurement teams increasingly use AI for supplier risk assessment, contract negotiation support, and spend analysis. Their AI policies should address vendor data confidentiality, competitive intelligence handling, and integration with existing procurement systems. Safe use cases might include analyzing public supplier information or standardizing contract terms. Unsafe practices would include uploading competitor pricing data to public AI platforms or using AI to make final vendor selection decisions without human review. Procurement policies should specify when AI vendor risk assessments must be validated by compliance or legal teams before acting on recommendations.

HR departments leverage AI for resume screening, employee sentiment analysis, and workforce planning. Their policies must address employment law compliance, particularly regarding bias in hiring decisions. According to EEOC guidance, employers remain liable for discriminatory outcomes even when using AI tools. The policy should mandate human review of all employment decisions, regular bias audits of AI screening tools, and transparent communication with candidates about AI use in hiring processes. HR should document AI’s role in decisions to defend against potential discrimination claims.

Sales teams use AI for lead scoring, proposal generation, and customer insight analysis. Policies should protect customer data privacy, ensure accurate product representations, and maintain compliance with marketing regulations. Clear escalation paths help sales representatives identify when AI-generated content needs legal or compliance review before it reaches customers. Sales policies should prohibit using AI to make claims about product capabilities that product management or legal teams have not verified.

Each department needs specific guidance on recognizing uncertain AI outputs. When an AI tool generates unexpected results, contradicts established knowledge, or produces recommendations with significant business impact, users need clear escalation procedures. This might include consulting subject matter experts, requesting additional human review, or engaging the legal team for risk assessment.

Practical Example: Procurement AI Use Case

Consider this scenario: A procurement team receives an AI-generated supplier risk assessment indicating potential financial instability. The policy should require:

- Human verification of the AI’s data sources to confirm they are current and relevant

- Consultation with finance teams to validate the analysis using internal financial assessment tools

- Engagement of legal counsel if contract modifications are recommended based on the assessment

- Documentation of the decision-making process showing how AI analysis informed but did not determine the final decision

- Regular reviews of AI supplier assessments against actual supplier performance to validate model accuracy

This structured approach ensures AI serves as a decision support tool rather than an unquestioned authority, maintaining human accountability for business outcomes.
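The final review step in the procurement example can be made concrete by comparing the AI’s past risk flags with what actually happened to each supplier. The sketch below assumes a hypothetical history of (flagged as high risk, later had problems) pairs and computes two standard measures. Precision is the share of flags that proved correct. Recall is the share of real problems that the AI flagged. Persistently low values on either measure would signal that the weight given to these assessments should be revisited.

```python
def flag_accuracy(assessments):
    """Compare AI 'high risk' flags with actual supplier outcomes.

    `assessments` is a list of (flagged_high_risk, actually_had_problems) booleans.
    Returns (precision, recall); a value is None when it cannot be computed.
    """
    tp = sum(1 for flagged, actual in assessments if flagged and actual)
    fp = sum(1 for flagged, actual in assessments if flagged and not actual)
    fn = sum(1 for flagged, actual in assessments if not flagged and actual)
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return precision, recall


# Hypothetical history: (AI flagged supplier as high risk, supplier later had delivery or financial problems)
history = [(True, True), (True, False), (True, True), (False, True), (False, False), (False, False)]
precision, recall = flag_accuracy(history)
print(f"precision={precision:.2f} recall={recall:.2f}")
```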

How Should Organizations Align AI Policies with Regulations?

The regulatory framework for AI continues to evolve, with significant developments expected through 2027. Organizations must align their AI policies with applicable regulations while anticipating future requirements.

The EU AI Act establishes a risk-based approach to AI regulation. Its obligations are phased in over several years:

- Prohibitions and AI literacy obligations have applied since February 2, 2025.
- The governance rules and the obligations for general-purpose AI models apply from August 2, 2025.
- Most remaining provisions, including the rules for the high-risk use cases listed in Annex III, apply from August 2, 2026.
- High-risk AI systems embedded in regulated products have an extended transition period until August 2, 2027 (EU AI Act Official Framework).

Organizations operating in the EU need policies that address these phased requirements, including risk assessments, transparency obligations, and human oversight mandates.

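High-risk AI systems under the EU AI Act include those used in employment decisions, creditworthiness assessment, law enforcement, and critical infrastructure management. Providers of such systems must implement quality management systems, maintain detailed technical documentation, design for human oversight, and meet accuracy, robustness, and cybersecurity requirements. Organizations deploying these systems must, among other duties, use them according to the provider’s instructions and assign trained staff to oversee them. Violations of high-risk obligations can draw fines of up to €15 million or 3% of total worldwide annual turnover, whichever is higher. The top tier of €35 million or 7% applies to prohibited AI practices.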
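Because each cap is the higher of a fixed amount and a share of turnover, the turnover figure dominates for large companies. The sketch below encodes the three fine tiers in Article 99 of the Act as understood here:

- €35 million or 7% for prohibited practices
- €15 million or 3% for most other obligations, including those for high-risk systems
- €7.5 million or 1% for supplying incorrect information to authorities

It also applies the rule that SMEs and start-ups face whichever amount is lower. These figures are maximums based on total worldwide annual turnover for the preceding financial year. Actual fines are set case by case, so the sketch shows only the arithmetic of the ceilings.

```python
# Fine ceilings under Article 99 of the EU AI Act: (fixed amount in EUR, percent of worldwide annual turnover).
TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),  # includes high-risk system obligations
    "incorrect_information": (7_500_000, 1),
}


def max_fine(tier, worldwide_turnover_eur, sme=False):
    fixed, percent = TIERS[tier]
    turnover_based = worldwide_turnover_eur * percent // 100
    # Large undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed, turnover_based) if sme else max(fixed, turnover_based)


print(max_fine("prohibited_practice", 2_000_000_000))       # 140000000
print(max_fine("other_obligation", 200_000_000))            # 15000000
print(max_fine("other_obligation", 200_000_000, sme=True))  # 6000000
```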

US regulatory guidance varies by sector and state. The ABA Model Rules of Professional Conduct provide a framework for ethical legal practice in the United States. The competence duty includes technology competence through ABA Model Rule 1.1, comment 8, which states that “a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology.” State bars have issued varying guidance on AI use, with some requiring disclosure of AI assistance to clients or courts.

Federal agencies have issued sector-specific AI guidance. The EEOC addresses AI in employment decisions, emphasizing that employers remain liable for discriminatory outcomes. The FTC warns about AI-related deceptive practices in marketing and consumer protection. Financial regulators address AI in risk management and compliance through guidance from the OCC, Federal Reserve, and CFPB.

UK regulations focus on sector-specific applications. The Information Commissioner’s Office addresses AI under existing data protection frameworks, emphasizing that UK GDPR principles apply to AI systems. The Financial Conduct Authority has issued guidance on AI in financial services. The Solicitors Regulation Authority emphasizes that solicitors remain accountable to clients for the services they provide, whether or not they use external AI.

Industry standards provide additional frameworks. ISO/IEC 23053 establishes a conceptual framework and shared terminology for describing artificial intelligence systems that use machine learning. ISO/IEC 23894 addresses AI risk management. And ISO/IEC 42001 specifies requirements for establishing, implementing, maintaining, and continually improving an Artificial Intelligence Management System (AIMS) within organizations. While not legally mandatory, these standards provide recognized best practices that demonstrate due diligence in AI governance.

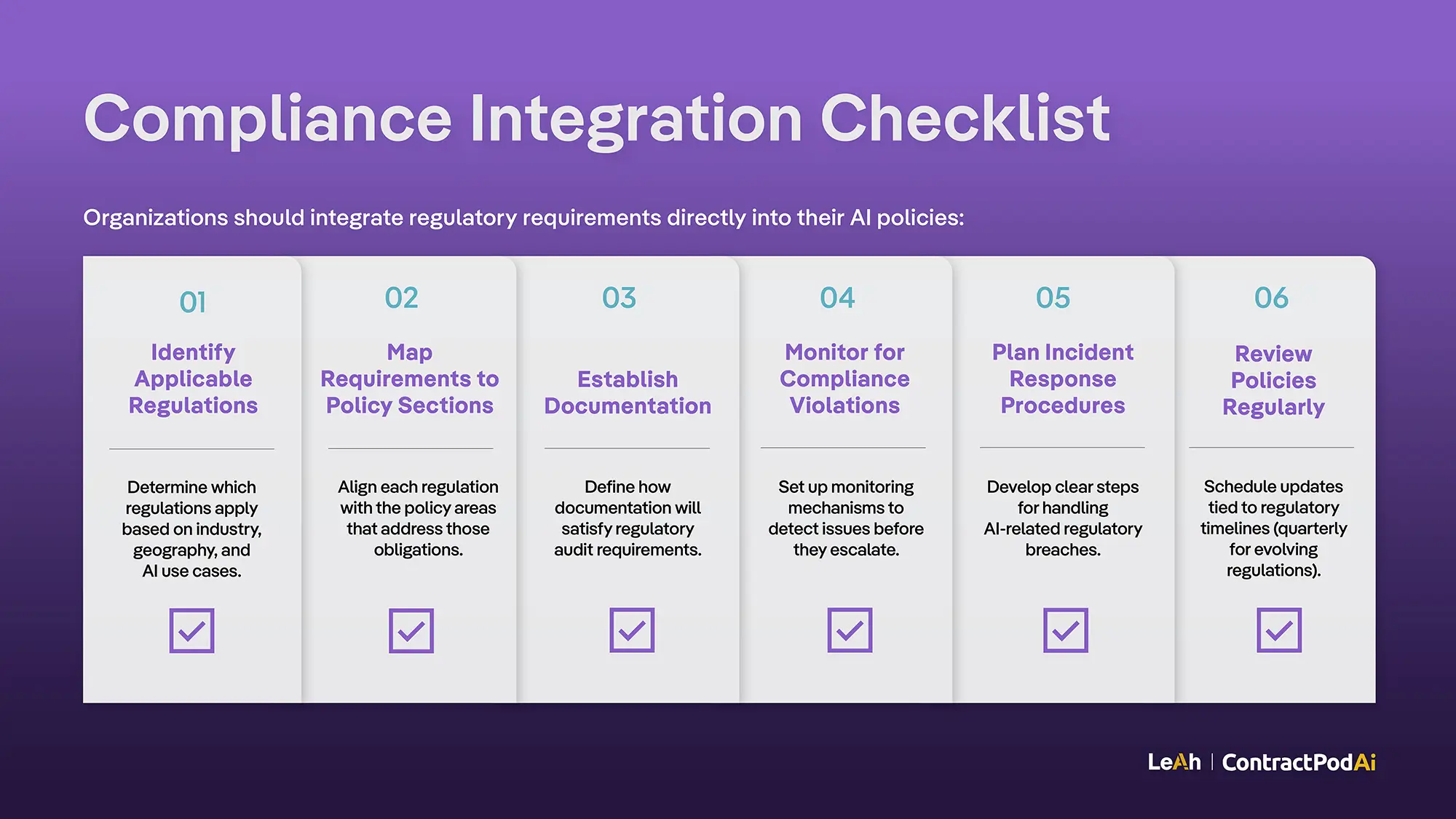

Compliance Integration Checklist:

Organizations should integrate regulatory requirements directly into their AI policies:

- Identify which regulations apply based on industry, geography, and AI use cases

- Map specific regulatory requirements to policy sections addressing those obligations

- Establish documentation standards that satisfy regulatory audit requirements

- Create monitoring mechanisms that detect potential compliance violations before they escalate

- Develop incident response procedures specifically for AI-related regulatory breaches

- Schedule regular policy reviews tied to regulatory implementation timelines (quarterly for rapidly evolving regulations)

Implementation Checklist

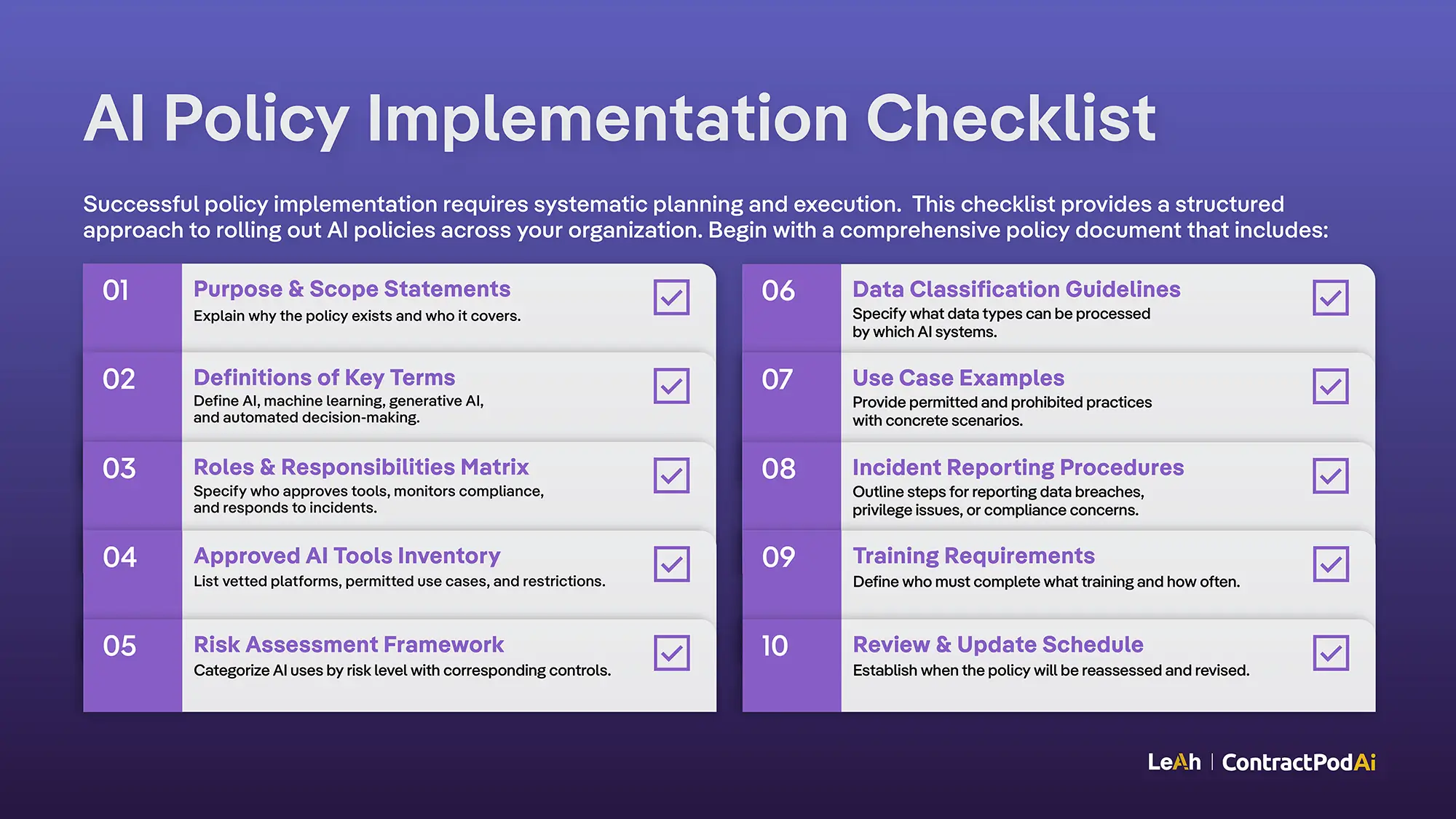

Successful policy implementation requires systematic planning and execution. This checklist provides a structured approach to rolling out AI policies across your organization.

Policy Document Template

Begin with a comprehensive policy document that includes:

- Purpose and scope statements explaining why the policy exists and who it covers

- Definitions of key terms such as AI, machine learning, generative AI, and automated decision-making

- Roles and responsibilities matrix specifying who approves tools, who monitors compliance, and who responds to incidents

- Approved AI tools inventory listing vetted platforms with their permitted use cases and restrictions

- Risk assessment framework categorizing AI uses by risk level and corresponding controls

- Data classification guidelines specifying what information categories can be processed by which AI systems

- Use case examples showing both permitted and prohibited practices with concrete scenarios

- Incident reporting procedures for data breaches, privilege concerns, or compliance issues

- Training requirements specifying who must complete what training and how often

- Review and update schedule establishing when the policy will be reassessed and revised

What Training Schedule Supports Policy Compliance?

Establish a training schedule that ensures comprehensive coverage:

- Initial policy rollout sessions for all affected teams, customized by role and department

- Tool-specific training when new AI systems are deployed, covering features and constraints

- Quarterly updates on regulatory changes and policy modifications affecting daily work

- Annual comprehensive reviews with competency assessments to validate understanding

- Ad-hoc sessions following incidents or significant changes in AI capabilities or regulations

Training should be interactive, including scenario-based exercises where employees make decisions about AI use and receive feedback on their choices. Track training completion and make it a prerequisite for accessing AI tools.
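Gating access on training completion can be expressed as a simple check when credentials are issued. The sketch assumes hypothetical course names and a record of completion dates. It treats training older than 365 days as lapsed, to match the annual review cycle described above. Tools with no defined training requirement are denied by default.

```python
from datetime import date, timedelta

TRAINING_VALID_FOR = timedelta(days=365)  # assumption: annual refresher cycle

# Hypothetical requirements: tool -> courses that must be complete and current.
REQUIRED_COURSES = {
    "contract-review-ai": ["ai-policy-basics", "contract-ai-tool"],
    "research-assistant": ["ai-policy-basics", "research-tool"],
}


def can_access(employee, tool, completions, today):
    """Issue credentials only if every required course is complete and not lapsed."""
    if tool not in REQUIRED_COURSES:
        return False  # no defined training path means the tool has not been rolled out
    done = completions.get(employee, {})
    for course in REQUIRED_COURSES[tool]:
        finished = done.get(course)
        if finished is None or today - finished > TRAINING_VALID_FOR:
            return False
    return True


completions = {"jdoe": {"ai-policy-basics": date(2025, 1, 15), "contract-ai-tool": date(2025, 2, 1)}}
print(can_access("jdoe", "contract-review-ai", completions, date(2025, 6, 1)))  # True
print(can_access("jdoe", "research-assistant", completions, date(2025, 6, 1)))  # False: course missing
print(can_access("jdoe", "contract-review-ai", completions, date(2026, 3, 1)))  # False: training lapsed
```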

Monitoring and Review Schedule

Create a regular monitoring cadence to ensure policy effectiveness:

- Monthly reviews of AI tool usage logs to identify unauthorized tools or concerning patterns

- Quarterly audits of high-risk AI applications, validating that controls are functioning as intended

- Semi-annual policy effectiveness assessments gathering feedback from users about policy clarity and practical challenges

- Annual comprehensive policy reviews considering new technologies, regulatory developments, and organizational changes

- Continuous monitoring of regulatory developments through subscriptions to relevant legal and compliance sources

Monitoring should produce documented findings with action items assigned to specific owners and deadlines for resolution.
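The monthly review of usage logs for unauthorized tools can start from a plain comparison against the approved tools list. The tool names and log fields (`department`, `tool`) below are hypothetical. The output counts unapproved usage by department, which gives department heads a concrete starting point for follow-up.

```python
from collections import Counter

# Hypothetical approved tools list (in practice, the published list the policy maintains).
APPROVED_TOOLS = {"contract-review-ai", "research-assistant", "supplier-risk-ai"}


def unauthorized_usage(log_rows):
    """Count uses of tools that are not on the approved list, per (department, tool)."""
    return Counter(
        (row["department"], row["tool"])
        for row in log_rows
        if row["tool"] not in APPROVED_TOOLS
    )


rows = [
    {"department": "Legal", "tool": "contract-review-ai"},
    {"department": "Sales", "tool": "public-chatbot"},
    {"department": "Sales", "tool": "public-chatbot"},
    {"department": "HR", "tool": "resume-ranker-plugin"},
]
for (dept, tool), n in unauthorized_usage(rows).most_common():
    print(f"{dept}: {tool} used {n} time(s) without approval")
```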

How Should Enforcement Be Handled?

Implement enforcement approaches that balance compliance with practicality:

- Technical controls that prevent unauthorized AI tool installation or block access to prohibited platforms through network security

- Access restrictions based on training completion, requiring employees to finish AI policy training before receiving credentials for approved tools

- Regular audits with documented findings shared with department heads and executives

- Clear consequences for policy violations, scaled to severity from warnings for minor infractions to termination for egregious breaches

- Recognition programs for exemplary AI governance, highlighting teams that use AI effectively while maintaining full compliance

Enforcement should be consistent and transparent, with violations addressed promptly to maintain policy credibility.

How Should Organizations Evolve Their AI Policies Over Time?

AI policies are living documents that must evolve with technology, regulations, and organizational needs. Regular reviews keep policies relevant and effective. Feedback mechanisms allow teams to report challenges and suggest improvements. Continuous monitoring of regulatory developments prevents compliance gaps.

Organizations should approach AI policy development as an iterative process. Start with core principles and controls, then expand based on experience and emerging needs. Pilot programs allow controlled experimentation with new AI tools before broader deployment. Cross-functional collaboration addresses diverse stakeholder needs while maintaining appropriate governance.

The goal is not to restrict AI adoption but to enable its responsible use. Well-designed policies give teams confidence to leverage AI’s capabilities while protecting the organization from risks. By establishing clear guidelines, providing comprehensive training, and maintaining oversight, organizations can realize AI’s benefits while meeting their legal and ethical obligations.

As AI technology continues advancing and regulatory frameworks solidify, organizations with mature AI governance will be best positioned to capitalize on opportunities while managing risks. The investment in comprehensive AI policies today creates the foundation for sustainable AI adoption tomorrow.

How Can Organizations Operationalize AI Policies?

Once your policy is established, consider how technology can support enforcement and monitoring. Contract management platforms like Leah can operationalize AI policies by providing controlled environments where legal and business teams use AI within policy-defined guardrails. These platforms offer audit trails, permission controls, and enterprise-grade security that align with your AI governance framework.

Organizations ready to move from policy documentation to operational implementation should assess how their existing legal technology stack supports or hinders AI policy compliance. Purpose-built AI tools designed for legal and contract workflows typically include built-in safeguards that make policy compliance the default, rather than something that depends on constant vigilance from users.

For guidance on implementing AI policies specific to your organization’s needs and industry requirements, contact ContractPodAi to discuss how purpose-built AI solutions can support your governance framework.